Are you looking to use the SadTalker API in your project or application? It lets you integrate SadTalker’s talking-head video generation directly into your own software.

I’ve simplified this process for those who want to explore SadTalker on their PC or laptop.

What Is SadTalker?

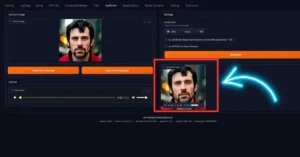

SadTalker is an impressive project that combines a single portrait image with audio to create a talking head video. It’s like giving a static image the ability to speak. The project aims to generate realistic 3D motion coefficients for stylized, audio-driven, single-image talking face animation.

How to Use SadTalker AI API?

Step 1: Installation

- Ensure you have Docker and NVIDIA drivers installed.

- Create the directories for the model files (checkpoints and gfpgan weights).

- Download the SadTalker model files from the provided address and store them in those directories.
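As a minimal sketch, the directory step can be scripted in Node.js. The folder names `checkpoints` and `gfpgan/weights` are an assumption based on the upstream SadTalker repository layout, and `createModelDirs` is a hypothetical helper name; adjust both to the paths your container actually mounts.

```javascript
// Sketch: create the model directories before downloading the weights.
// ASSUMPTION: checkpoints/ and gfpgan/weights/ mirror the upstream SadTalker
// layout; change MODEL_DIRS if your docker-compose mounts different paths.
const fs = require("fs");
const path = require("path");

const MODEL_DIRS = ["checkpoints", path.join("gfpgan", "weights")];

function createModelDirs(rootDir) {
  return MODEL_DIRS.map((dir) => {
    const fullPath = path.join(rootDir, dir);
    // recursive: true creates missing parents and does not fail if the folder exists
    fs.mkdirSync(fullPath, { recursive: true });
    return fullPath;
  });
}
```

For example, `createModelDirs("/path/to/SadTalker")` returns the two paths it created; running it again is harmless, which makes it safe to keep in a setup script.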

Step 2: Run the Container Service

- Start the SadTalker container using Docker Compose.

- The API will then be available at http://localhost:10364.
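Since the container may take some time to start, it can help to wait until it responds before sending requests. Below is a hypothetical `waitForService` helper: a sketch that assumes Node.js 18+ (for the built-in `fetch`) and treats any successful response from `/docs` as "ready". The retry count and delay are arbitrary choices, not values from the project.

```javascript
// Sketch: poll the container until it answers, then resolve.
// ASSUMPTIONS: Node.js 18+ (global fetch); a 2xx response from /docs means
// "ready"; retries and delayMs are arbitrary defaults, not project values.
async function waitForService(url, { retries = 30, delayMs = 2000, fetchImpl = globalThis.fetch } = {}) {
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      const res = await fetchImpl(url);
      if (res.ok) return attempt; // how many attempts it took
    } catch {
      // Connection refused while the container is still starting: retry
    }
    if (attempt < retries) {
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error(`Service at ${url} did not become ready after ${retries} attempts`);
}
```

Inside an async function or an ES module you would call `await waitForService("http://localhost:10364/docs");` and only then start sending generation requests.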

Step 3: Access the API Documentation

- Visit http://localhost:10364/docs in your web browser.

- You’ll find detailed documentation on how to use the API.
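If you prefer to inspect the API from a script, and assuming the `/docs` page is a FastAPI-style Swagger UI (an assumption; the framework is not named here), the machine-readable spec would usually be served at `/openapi.json`. This sketch lists each route and HTTP method it finds; `listEndpoints` and `fetchEndpoints` are hypothetical helper names, and it needs Node.js 18+ for `fetch`.

```javascript
// Sketch: list routes from an OpenAPI document.
// ASSUMPTION: the service exposes its spec at /openapi.json (FastAPI default).
const HTTP_METHODS = new Set(["get", "put", "post", "delete", "options", "head", "patch", "trace"]);

function listEndpoints(spec) {
  const endpoints = [];
  for (const [route, pathItem] of Object.entries(spec.paths || {})) {
    for (const key of Object.keys(pathItem)) {
      // Path items can also hold "parameters", "summary", etc.; keep only verbs
      if (HTTP_METHODS.has(key)) endpoints.push(`${key.toUpperCase()} ${route}`);
    }
  }
  return endpoints.sort();
}

async function fetchEndpoints(baseUrl = "http://localhost:10364", fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(`${baseUrl}/openapi.json`);
  if (!res.ok) throw new Error(`Could not load OpenAPI spec: HTTP ${res.status}`);
  return listEndpoints(await res.json());
}
```

If the assumption holds, the output should include an entry such as `POST /generate/` for the endpoint described next.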

Generate Talking Face Videos

Use the API to turn an image and an audio clip into a talking face video.

The API endpoint for generating videos is http://localhost:10364/generate/.

Send a POST request with a JSON body like this:

```json
{
  "image_link": "URL_TO_YOUR_IMAGE",
  "audio_link": "URL_TO_YOUR_AUDIO"
}
```

Replace URL_TO_YOUR_IMAGE and URL_TO_YOUR_AUDIO with actual image and audio links.
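Here is a minimal Node.js sketch of that request, assuming Node.js 18+ for the built-in `fetch`. The endpoint and field names come from above; the response format is not documented here, so the hypothetical `generateVideo` helper returns parsed JSON when the server labels its reply as JSON, and raw bytes otherwise.

```javascript
// Sketch: POST an image URL and an audio URL to the local SadTalker container.
// ASSUMPTIONS: Node.js 18+ (global fetch); the response format is undocumented,
// so JSON replies are parsed and anything else is returned as raw bytes.
async function generateVideo(imageLink, audioLink, { baseUrl = "http://localhost:10364", fetchImpl = globalThis.fetch } = {}) {
  const res = await fetchImpl(`${baseUrl}/generate/`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // Field names match the JSON body shown above
    body: JSON.stringify({ image_link: imageLink, audio_link: audioLink }),
  });
  if (!res.ok) {
    throw new Error(`Generation failed: HTTP ${res.status}`);
  }
  const contentType = res.headers.get("content-type") || "";
  if (contentType.includes("application/json")) {
    return res.json();
  }
  // Otherwise assume binary content, such as an MP4 file
  return Buffer.from(await res.arrayBuffer());
}
```

For example, `await generateVideo("https://example.com/face.png", "https://example.com/voice.wav")`, where both URLs are placeholders for your own files.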

How to Use the SadTalker Replicate API?

1. Installation

Let’s get the Node.js client installed. You can do this easily using npm:

```bash
npm install replicate
```

Great, now that the package is installed, let’s set up authentication. You’ll need your API token for this.

If you haven’t already, grab your API token from your account settings on Replicate.

Once you have your token, set it as an environment variable:

```bash
export REPLICATE_API_TOKEN=<paste-your-token-here>
```

2. Running the Model

Now, let’s run the SadTalker model. Here’s a code snippet to get you started:

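```javascript
// Save as an ES module (e.g. run.mjs) so top-level await is allowed
import Replicate from "replicate";

// Authenticate with the token exported above
const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

const output = await replicate.run(
  "cjwbw/sadtalker:3aa3dac9353cc4d6bd62a8f95957bd844003b401ca4e4a9b33baa574c549d376",
  {
    input: {
      source_image: "https://example.com/path/to/file/source_image",
      // SadTalker needs an audio track to drive the animation
      driven_audio: "https://example.com/path/to/file/driven_audio",
    },
  }
);

console.log(output);
```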

This code creates a Replicate client authenticated with your API token, then runs the SadTalker model with the specified inputs and prints the result.
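`replicate.run` creates a prediction and waits for it to finish. If you would rather not depend on the npm package, a rough equivalent against Replicate's HTTP API is sketched below: it POSTs to `https://api.replicate.com/v1/predictions`, then polls `/v1/predictions/{id}` until the status is `succeeded`, `failed`, or `canceled`. The helper name `runPrediction` and the one-second polling interval are our own choices, and it assumes Node.js 18+ for `fetch`.

```javascript
// Sketch only: create-then-poll against Replicate's HTTP API, no npm package.
// ASSUMPTIONS: Node.js 18+ (global fetch); the 1 s interval is arbitrary;
// fetchImpl is injectable so the flow can be exercised offline.
const REPLICATE_PREDICTIONS_URL = "https://api.replicate.com/v1/predictions";
const TERMINAL_STATUSES = new Set(["succeeded", "failed", "canceled"]);

async function runPrediction(version, input, { token = process.env.REPLICATE_API_TOKEN, intervalMs = 1000, fetchImpl = globalThis.fetch } = {}) {
  if (!token) throw new Error("REPLICATE_API_TOKEN is not set");
  const headers = { Authorization: `Bearer ${token}`, "Content-Type": "application/json" };

  // 1. Create the prediction
  let res = await fetchImpl(REPLICATE_PREDICTIONS_URL, {
    method: "POST",
    headers,
    body: JSON.stringify({ version, input }),
  });
  if (!res.ok) throw new Error(`Create failed: HTTP ${res.status}`);
  let prediction = await res.json();

  // 2. Poll until it reaches a terminal status
  while (!TERMINAL_STATUSES.has(prediction.status)) {
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
    res = await fetchImpl(`${REPLICATE_PREDICTIONS_URL}/${prediction.id}`, { headers });
    if (!res.ok) throw new Error(`Poll failed: HTTP ${res.status}`);
    prediction = await res.json();
  }

  // 3. Return the output (a URI string for SadTalker) or surface the error
  if (prediction.status !== "succeeded") {
    throw new Error(`Prediction ${prediction.status}: ${prediction.error ?? "no error message"}`);
  }
  return prediction.output;
}
```

For example: `await runPrediction("3aa3dac9353cc4d6bd62a8f95957bd844003b401ca4e4a9b33baa574c549d376", { source_image: "https://example.com/face.png", driven_audio: "https://example.com/voice.wav" })`. Injecting `fetchImpl` keeps the network out of unit tests.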

3. Specifying Input Parameters

SadTalker API provides various input parameters to customize your results. Here’s a quick rundown:

- source_image: Upload the source image (e.g., video.mp4 or picture.png).

- driven_audio: Upload the driving audio; .wav and .mp4 files are accepted.

- enhancer: Choose a face enhancer (`gfpgan` or `RestoreFormer`). Default is `gfpgan`.

- preprocess: Specify how to preprocess the images (`crop`, `resize`, or `full`). Default is `full`.

- ref_eyeblink: Path to a reference video providing eye blinking.

- ref_pose: Path to a reference video providing pose.

- still: Boolean indicating whether to crop back to the original videos for full body animation when preprocess is set to `full`.
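To catch typos before sending a prediction, you could assemble the input object with a small client-side validator. This is a sketch: `buildSadTalkerInput` is a hypothetical helper, its allowed values and defaults are taken from the parameter list above, and treating `source_image` and `driven_audio` as required is our own assumption.

```javascript
// Sketch: assemble and sanity-check a SadTalker input object before sending it.
// Allowed values and defaults follow the parameter list; the validation itself
// is a local convenience, not something Replicate requires.
const ENHANCERS = ["gfpgan", "RestoreFormer"];
const PREPROCESS_MODES = ["crop", "resize", "full"];

function buildSadTalkerInput({ source_image, driven_audio, enhancer = "gfpgan", preprocess = "full", still, ref_eyeblink, ref_pose } = {}) {
  // ASSUMPTION: both media inputs are needed to produce a talking video
  if (!source_image || !driven_audio) {
    throw new Error("source_image and driven_audio are both required");
  }
  if (!ENHANCERS.includes(enhancer)) throw new Error(`enhancer must be one of ${ENHANCERS.join(", ")}`);
  if (!PREPROCESS_MODES.includes(preprocess)) throw new Error(`preprocess must be one of ${PREPROCESS_MODES.join(", ")}`);

  const input = { source_image, driven_audio, enhancer, preprocess };
  // Optional fields are only included when the caller sets them
  if (still !== undefined) input.still = Boolean(still);
  if (ref_eyeblink) input.ref_eyeblink = ref_eyeblink;
  if (ref_pose) input.ref_pose = ref_pose;
  return input;
}
```

The returned object can be passed as `input` to `replicate.run` or `replicate.predictions.create`.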

4. Webhook Integration

You can also specify a webhook URL to be called when the prediction is complete.

Here’s an example:

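```javascript
// Reuses the `replicate` client from above; run as an ES module for top-level await
const prediction = await replicate.predictions.create({
  version: "3aa3dac9353cc4d6bd62a8f95957bd844003b401ca4e4a9b33baa574c549d376",
  input: {
    source_image: "https://example.com/path/to/file/source_image",
    driven_audio: "https://example.com/path/to/file/driven_audio",
  },
  // Replicate POSTs the finished prediction to this URL
  webhook: "https://example.com/your-webhook",
  // Only notify once the prediction has finished
  webhook_events_filter: ["completed"],
});
```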
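On the receiving end, Replicate sends an HTTP POST whose JSON body is the prediction object. Here is a minimal sketch of a receiver using Node's built-in `http` module; `handlePrediction` and `createWebhookServer` are hypothetical names, and the sketch does not verify webhook signatures, which you should add before exposing it publicly.

```javascript
// Sketch: receive Replicate's webhook call with Node's built-in http module.
// ASSUMPTION: no signature verification; add it before going to production.
const http = require("http");

// Turn a finished prediction into a simple result object
function handlePrediction(prediction) {
  if (prediction.status === "succeeded") {
    return { ok: true, videoUrl: prediction.output };
  }
  // "failed" or "canceled": surface the error message if there is one
  return { ok: false, reason: prediction.error || prediction.status };
}

function createWebhookServer(onResult) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405).end();
      return;
    }
    let body = "";
    req.setEncoding("utf8");
    req.on("data", (chunk) => (body += chunk));
    req.on("end", () => {
      let prediction;
      try {
        prediction = JSON.parse(body);
      } catch {
        res.writeHead(400).end(); // body was not valid JSON
        return;
      }
      res.writeHead(200).end(); // acknowledge quickly, then process
      onResult(handlePrediction(prediction));
    });
  });
}
```

For example, `createWebhookServer((result) => console.log(result)).listen(3000);`, with port 3000 exposed at the URL you passed as `webhook`.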

5. Output Schema

The model’s output is described by the following JSON schema:

```json
{
  "type": "string",
  "title": "Output",
  "format": "uri"
}
```

In other words, the model returns a single string: the URI of the generated video.
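Since the output is just a URI, you will typically want to download the video. This is a sketch assuming Node.js 18+ for `fetch` and that you already have the plain URI string the schema describes (for example, from a webhook payload). Depending on the client version, `replicate.run` may return a different object type, so check what you actually receive. `downloadOutput` is a hypothetical helper; download promptly, since hosted output files may not be kept indefinitely.

```javascript
// Sketch: save the video referenced by the output URI to a local file.
// ASSUMPTIONS: Node.js 18+ (global fetch); outputUri is a plain string URI.
const fs = require("fs");

async function downloadOutput(outputUri, destPath, fetchImpl = globalThis.fetch) {
  if (typeof outputUri !== "string") {
    throw new TypeError("Expected the output to be a URI string");
  }
  const res = await fetchImpl(outputUri);
  if (!res.ok) throw new Error(`Download failed: HTTP ${res.status}`);
  // Buffer the whole file in memory; fine for short talking-head clips
  const bytes = Buffer.from(await res.arrayBuffer());
  await fs.promises.writeFile(destPath, bytes);
  return bytes.length; // number of bytes written
}
```

For example, `await downloadOutput(output, "talking-head.mp4")` saves the result next to your script.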

SadTalker Project Details

- Project Source: SadTalker on Replicate

- Model: Provides realistic 3D motion coefficients for talking face animation.

- API: Transforms SadTalker into a Docker container with a RESTful API.

- Improvements: According to the project, this version runs about 10 times faster than the original SadTalker.

- TTS Integration: Includes an open-source TTS service for generating audio from text.

Remember that use of the model is subject to the original developers’ license terms. Feel free to explore and create your own talking face animations with SadTalker.


Demi Franco, a BTech in AI from CQUniversity, is a passionate writer focused on AI. She crafts insightful articles and blog posts that make complex AI topics accessible and engaging.