Let’s explore the difference between SadTalker and Wav2Lip, two AI technologies related to audio-driven talking face animation.

SadTalker vs. Wav2Lip: A Comparative Analysis

1. Wav2Lip

Wav2Lip is an impressive lip-syncing model that generates realistic lip movements from audio input. It has gained popularity for its ability to synchronize lip movements with spoken words, making it useful for applications like dubbing and video editing.

Methodology:

Wav2Lip employs a deep learning architecture that combines visual features extracted from video frames with audio features from the corresponding speech signal. The model learns to predict accurate lip shapes based on the audio input.

Key Features:

- High-Quality Lip Sync: Wav2Lip produces lip movements that closely match the audio, resulting in convincing lip-sync animations.

- Stability: It provides stable results across various audio inputs and video contexts.

- Widely Used: Wav2Lip has been widely adopted in the research and creative communities.

Limitations:

Dependency on Clear Audio: Wav2Lip performs best when the audio quality is clear and noise-free.

Single-View Input: It relies on a single image or video frame as input, which may limit its accuracy.

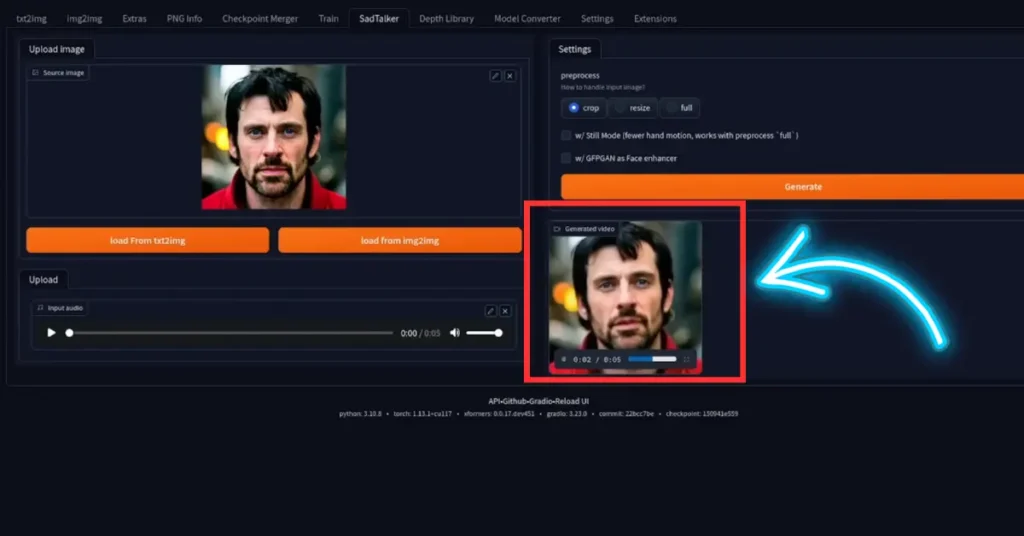

2. SadTalker

SadTalker is a novel approach that takes lip-syncing to the next level. Developed by the OpenTalker team, SadTalker aims to create stylized and expressive talking face animations.

Methodology:

SadTalker leverages 3D motion coefficients to generate realistic lip movements. It focuses on capturing nuanced expressions and stylized features.

Key Features:

- Stylized Animation: SadTalker goes beyond mere synchronization and introduces artistic flair to talking face animations.

- Learning 3D Motion Coefficients: By learning motion coefficients, SadTalker achieves more expressive and dynamic lip movements.

- CVPR 2023: SadTalker was presented at the Conference on Computer Vision and Pattern Recognition (CVPR) in 2023.

Limitations:

- Complexity: SadTalker’s approach involves additional complexity due to 3D modeling.

- Resource Intensive: Training SadTalker may require substantial computational resources.

Summary Comparison

| Aspect | Wav2Lip | SadTalker |

|---|---|---|

| Methodology | Visual-audio fusion | 3D motion coefficients |

| Quality | High-quality lip sync | Stylized and expressive |

| Usage | Dubbing, video editing | Artistic talking face animation |

| GitHub Link | Wav2Lip | SadTalker |

Both Wav2Lip and SadTalker contribute significantly to the field of audio-driven animation. While Wav2Lip excels in accurate synchronization, SadTalker adds an artistic touch to the mix. Researchers and creators can choose the one that aligns best with their specific requirements.